The ability to scale your applications and services efficiently and seamlessly is crucial for accommodating growing user demands and ensuring optimal performance.

One solution that has gained immense popularity in the world of container orchestration is Azure Kubernetes Service (AKS).

In this blog, we will explore how AKS can help you achieve scalability nirvana for your applications, ensuring they can handle increasing workloads with ease.

Understanding the Essence of Scalability

Scalability is the capability of a system, application, or service to handle increasing workloads without compromising its performance. Imagine a website that experiences a sudden surge in traffic due to a viral social media post or a retail application during a holiday sale. Scalability ensures that these systems can seamlessly adapt to increased demand without crashing or slowing down, leading to a positive user experience and potential business growth.

Scalability is not limited to adding more hardware resources; it also involves optimizing software architecture and infrastructure to handle varying levels of traffic efficiently. In essence, it’s about designing your technology stack to be flexible and responsive to changes in demand.

The Rise of Containerization and Kubernetes

Containerization: A Paradigm Shift

Containerization has transformed the way applications are developed and deployed. Containers package an application along with its dependencies, ensuring that it runs consistently across different environments, from development laptops to production servers. This encapsulation simplifies application management and eliminates the infamous “it works on my machine” problem.

With containers, you can create a predictable, portable, and isolated environment for your applications, making them easier to scale and maintain.

The Power of Kubernetes

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It takes containerization to the next level by automating many aspects of deploying, scaling, and managing containerized applications. Kubernetes abstracts away the underlying infrastructure complexities, providing a unified platform for container orchestration across various cloud providers and on-premises environments.

Key Kubernetes features include:

- Container Orchestration: Kubernetes schedules containers onto nodes, restarts them when they fail, and keeps the running state of the cluster in line with the state you declare.

- Load Balancing: It distributes incoming network traffic across multiple pods (instances of an application) to enhance availability and reliability.

- Auto Scaling: Kubernetes can automatically adjust the number of running pods based on resource utilization or custom metrics, ensuring optimal performance.

- Rolling Updates: Rolling updates allow you to deploy new versions of your application without downtime by gradually replacing old pods with new ones.
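To make the rolling update idea concrete, here is a minimal Python sketch of a simplified model, not the actual Kubernetes controller logic. It assumes new pods become ready instantly, and the function name `rolling_update_steps` is hypothetical. The `max_surge` and `max_unavailable` parameters mirror the Deployment settings `maxSurge` and `maxUnavailable`, expressed here as absolute pod counts.

```python
def rolling_update_steps(replicas, max_surge=1, max_unavailable=0):
    """Return (old_pods, new_pods) after each step of a simplified rollout.

    Assumption: every new pod is ready as soon as it is created.
    """
    if max_surge == 0 and max_unavailable == 0:
        # With both budgets at zero the rollout could never make progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")

    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        can_add = min(replicas - new, replicas + max_surge - (old + new))
        new += can_add
        # Remove old pods without dropping below replicas - max_unavailable.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        old -= can_remove
        steps.append((old, new))
    return steps


# Three replicas, one extra pod allowed, none allowed to be unavailable.
for old, new in rolling_update_steps(3, max_surge=1, max_unavailable=0):
    print(f"old={old} new={new}")
```

In this model the total pod count never falls below the desired replica count, which is the property that lets a rollout proceed without downtime.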

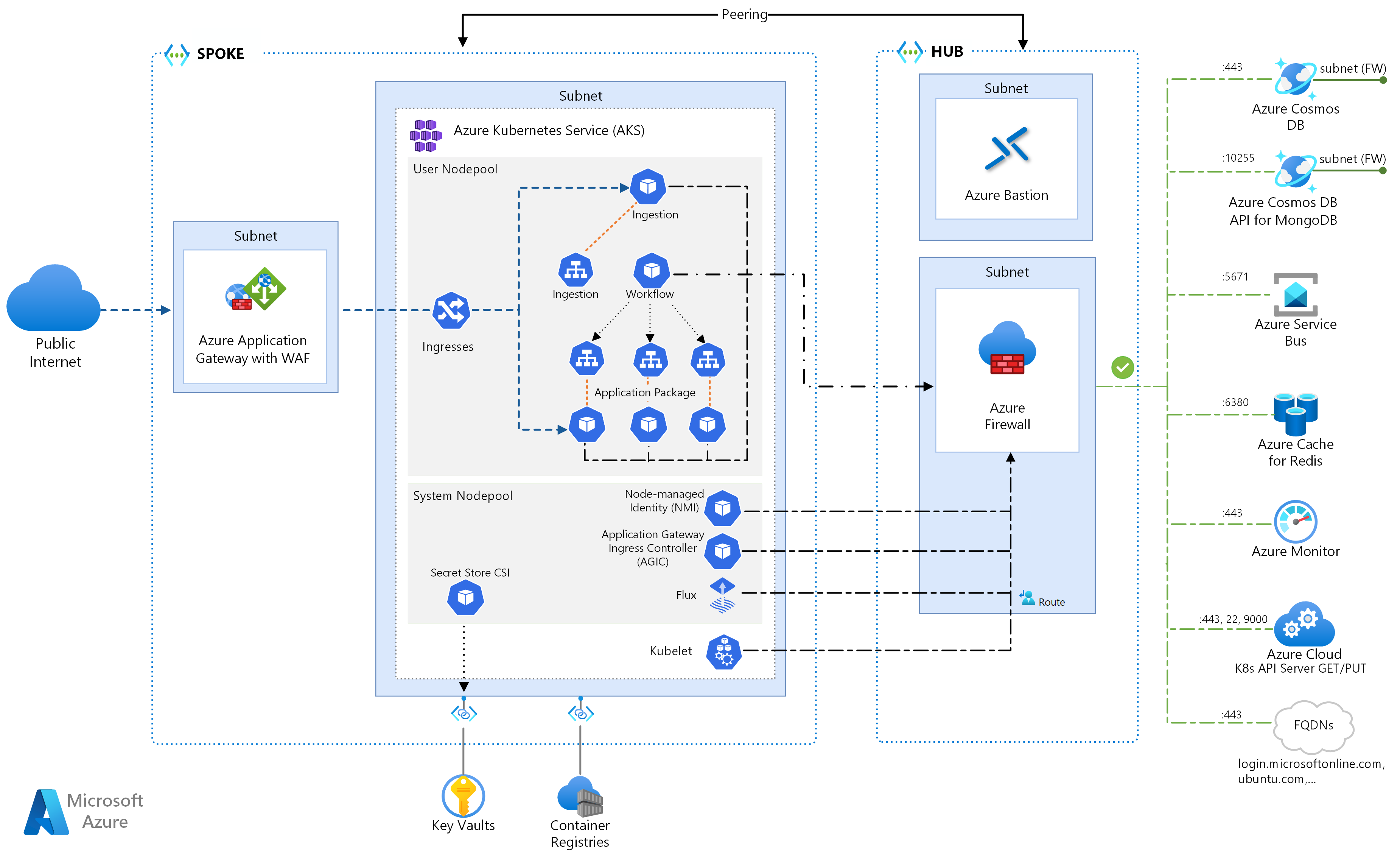

Introducing Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is a managed Kubernetes service offered by Microsoft Azure. It’s designed to simplify the deployment, management, and scaling of containerized applications using Kubernetes. Let’s explore how AKS can help you achieve scalability nirvana.

Effortless Cluster Management

Setting up and managing a Kubernetes cluster manually can be complex and time-consuming. AKS abstracts these complexities by handling cluster provisioning, scaling, and upgrades for you. With a few clicks or command-line instructions, you can have a fully functional Kubernetes cluster up and running in Azure.

AKS leverages Azure’s robust infrastructure to provide a reliable and secure environment for your containers, allowing you to focus on deploying and scaling your applications.

Auto Scaling

One of the standout features of AKS is auto scaling of the cluster itself. You set a minimum and maximum node count, and when pods cannot be scheduled because the existing nodes lack CPU or memory, AKS automatically adds nodes (virtual machines) to the cluster. When nodes sit underused, it removes them.

For example, if your online store encounters a traffic spike during a flash sale, AKS can add nodes so the extra application instances have room to run, and when the traffic subsides, it can remove those nodes to save resources and reduce costs.
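As a rough illustration, the Python sketch below assembles the Azure CLI command that enables the cluster autoscaler on an existing cluster. The resource group and cluster names are placeholders, and the helper name `autoscaler_command` is hypothetical. The script only prints the command and does not run it.

```python
import shlex


def autoscaler_command(resource_group, cluster, min_count, max_count):
    """Build the `az aks update` argument list that enables the cluster autoscaler."""
    if min_count > max_count:
        raise ValueError("min_count must not exceed max_count")
    return [
        "az", "aks", "update",
        "--resource-group", resource_group,
        "--name", cluster,
        "--enable-cluster-autoscaler",
        "--min-count", str(min_count),
        "--max-count", str(max_count),
    ]


# Placeholder names; substitute your own resource group and cluster.
cmd = autoscaler_command("myResourceGroup", "myAKSCluster", 1, 5)
print(shlex.join(cmd))
```

Keeping the bounds explicit matters for cost: the maximum node count caps how far a traffic spike can grow your bill.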

Integrated Monitoring and Logging

To effectively manage scalability, you need real-time visibility into your cluster’s performance and the health of your applications. AKS seamlessly integrates with Azure Monitor and Azure Log Analytics, Microsoft’s monitoring and telemetry services.

With these tools, you can:

- Monitor the health and performance of your applications and infrastructure.

- Set up alerts based on custom thresholds to proactively respond to issues.

- Gain insights into resource utilization, container performance, and application logs.

This integrated monitoring and logging ecosystem ensures that you can detect and address scalability-related issues promptly.

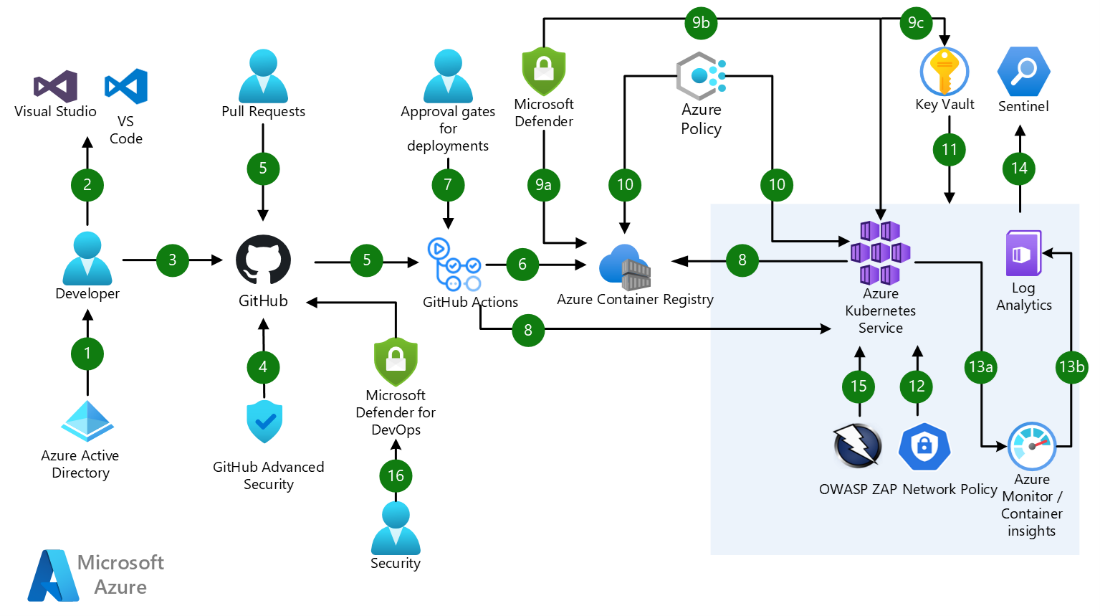

DevOps Integration

Scalability isn’t just about infrastructure; it’s also deeply tied to your development and deployment processes. AKS offers robust integration with popular DevOps tools like Azure DevOps, Jenkins, and GitHub Actions.

By incorporating AKS into your DevOps pipelines, you can:

- Automate the building and deployment of containerized applications.

- Ensure consistent and reliable deployments to your AKS cluster.

- Continuously improve and optimize your scalability solutions as part of your CI/CD processes.

This integration streamlines your development and operations workflows, enabling rapid and efficient scaling of your applications.

Horizontal Pod Autoscaling

AKS supports horizontal pod autoscaling, a Kubernetes feature that dynamically adjusts the number of pod replicas for a workload based on demand. Once you define a target metric (such as CPU usage or a custom application metric) and minimum and maximum replica counts, AKS can automatically add pods to handle traffic spikes and remove them when demand falls.

For instance, if your e-commerce website experiences a sudden influx of shoppers during a holiday season, horizontal pod autoscaling ensures that your application can accommodate the increased load, providing a smooth shopping experience for customers.
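The core of the scaling decision is a simple ratio. The Kubernetes documentation gives it as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that formula with a tolerance band and min/max clamping. The function name and sample numbers are illustrative, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the documented HPA ratio formula."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
# 6 pods averaging 20% CPU against a 60% target.
print(desired_replicas(6, 20, 60))
```

The upper bound is worth setting deliberately, since it limits how many pods (and therefore nodes) a sudden surge can request.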

Cost Optimization

While scalability is essential, you also need to consider cost implications. AKS offers several ways to optimize costs while ensuring efficient scalability:

- Resource Requests and Limits: You can define resource requests and limits for your pods. Requests tell the scheduler how much CPU and memory to reserve for a container, and limits cap what it can consume. Setting both close to real usage prevents resource waste and reduces costs.

- Node Pools: AKS allows you to create multiple node pools with different virtual machine sizes. You can assign workloads with varying resource requirements to the appropriate node pool, optimizing resource utilization.

- Auto Pausing: With Azure Logic Apps and Azure Functions, you can schedule development and testing environments to pause during non-working hours and resume when they are needed, reducing resource consumption and costs.
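To see why resource requests affect cost, consider the back-of-the-envelope Python sketch below. It estimates how many pods fit on a node and how many nodes a workload needs. All numbers are made up for illustration, the function names are hypothetical, and the sketch assumes the full node capacity is available to pods, whereas a real node reserves part of its capacity for system components.

```python
def pods_per_node(node_cpu_m, node_mem_mi, pod_cpu_m, pod_mem_mi):
    """Pods that fit on one node, limited by whichever resource runs out first."""
    return min(node_cpu_m // pod_cpu_m, node_mem_mi // pod_mem_mi)


def nodes_needed(replicas, per_node):
    """Nodes required to place all replicas (ceiling division)."""
    if per_node == 0:
        raise ValueError("pod requests exceed node capacity")
    return -(-replicas // per_node)


# Hypothetical node: 4 vCPU (4000 millicores) and 16 GiB (16384 MiB) of memory.
NODE_CPU_M, NODE_MEM_MI = 4000, 16384

right_sized = pods_per_node(NODE_CPU_M, NODE_MEM_MI, pod_cpu_m=250, pod_mem_mi=512)
oversized = pods_per_node(NODE_CPU_M, NODE_MEM_MI, pod_cpu_m=1000, pod_mem_mi=512)

print("right-sized requests:", nodes_needed(20, right_sized), "nodes for 20 replicas")
print("oversized requests:  ", nodes_needed(20, oversized), "nodes for 20 replicas")
```

With these sample numbers, requesting four times more CPU than a pod needs raises the node count for 20 replicas from 2 to 5, which is the kind of waste that accurate requests avoid.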

To delve deeper into AKS and Kubernetes, consider exploring the following external references:

- Azure Kubernetes Service (AKS) Documentation: Microsoft’s official documentation provides comprehensive guidance on setting up and managing AKS clusters, including advanced features and best practices.

- Kubernetes Official Website: The official Kubernetes website offers extensive resources, including documentation, tutorials, and community insights, to help you master container orchestration with Kubernetes.

Achieving Scalability Nirvana with AKS

In summary, Azure Kubernetes Service (AKS) is a powerful solution for achieving scalability nirvana for your applications. It simplifies Kubernetes cluster management, offers robust auto scaling capabilities, integrates monitoring and logging, and seamlessly fits into your DevOps workflows. With AKS, you can confidently scale your applications to meet increasing workloads, optimize costs, and maintain peak performance.

To explore how AKS can benefit your specific use case, don’t hesitate to reach out to ISmile Technologies, where our experts can provide tailored guidance and solutions.

By embracing AKS, you can leave behind scalability challenges and embark on a journey toward seamless growth and performance for your applications.

Start Scaling with AKS Today

If you’re ready to experience the benefits of AKS firsthand, contact ISmile Technologies today. Our expert team is here to assist you in harnessing the power of AKS to achieve scalability nirvana for your applications.