You might have heard the expression that “Data is the new oil”, that’s because data is key for any business decision system of an organization. But then, the required data is not stored in one place like a regular & vanilla RDBMS. Normally, the data remains scattered across various RDBMS & other data silos. So, it’s quite common to come across situations where data is siting across different kind of sources, and the DBA needs to find a solution to pull all relevant data into a centralized location for further analysis.

The Challenge

Consider a particular scenario – we have some sales data in AWS Postgres RDS Instance and that data has to be replicated into some tables in their Azure VM with MS SQL Server. But the challenge is heterogeneous replication is marked as deprecated, and it doesn’t work anywhere but only between SQL Server and Oracle. No Postgres allowed in the batch. Also, native heterogeneous replication is not found on the Postgres side. We need third-party tools to achieve this or work manually in HIGH effort mode, creating a CDC (change data capture) mechanism through the use of triggers, and then getting data from control tables. It sounds fun to implement, but time is of the essence. Getting such a solution on ground would take quite some time. Therefore, another solution had to be used to complete this data movement.

A Possible Solution

The Big Data scenario has many tools that can achieve this objective, but they don’t have the advantages that Kafka offered.

Initially, Apache Kafka was designed as a message queue like app (like IBM MQ Series or even SQL Server Service Broker). The best definition of it is perhaps – “Apache Kafka is more like a community distributed event streaming technology which can handle trillions of events per day”. But a streaming system must have the ability to get data from different data sources and deliver the messages on different data endpoints.

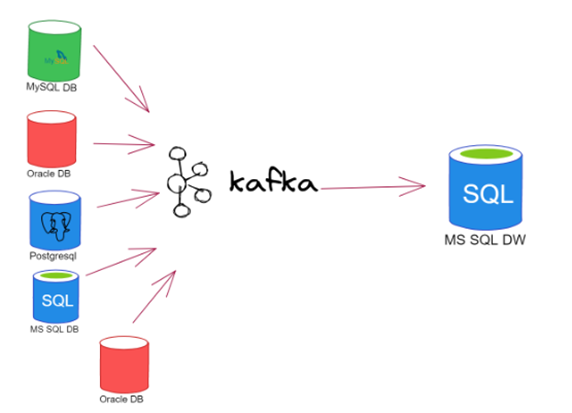

There are many tools to achieve this – Azure Event Hubs, AWS Kinesis, or Kafka managed in Confluent Cloud. However, to show how things work under the hood, and since they have similar backgrounds (Apache Kafka open-source code) we have decided to show how to set up the environment from scratch without any managed services for the stream processing. So, the picture would look something like this:

The basic Apache Kafka components have been enlisted here:

Message: This is the smallest data unit in Kafka. From the perspective of a DBA, a message is simply a row in a database table. In the Kafka, a message is an array of bytes.

Schema: It’s a structure that accompanies messages, to make them understandable by the consuming applications.

Topics: Messages categories are Topics. In the DBA world, that is like a table. Messages are read in order from beginning to end in an append-only format.

Producers and consumers: This idea is very similar to SQL Server Replication publishers and subscribers. However, Kafka has been provided the ability to handle multiple producers and consumers simultaneously.

Brokers and clusters: A single Kafka server is known as “broker”. It gets messages from the producers, scripts an offset to them, and helps in persisting them on disk. It also serves consumers as they request for messages. In general, in a production environment, brokers are a cluster’s part.

Connectors: Apache Kafka has been provided with many connectors for handling consumers and producers. Different connectors are available either free of charge or on purchase.

So, Kafka is the choice for the task at hand, and we’re going to create the environment for the data movement from scratch.

The Architecture

To achieve the streaming functionality and ensure data flow we will use a set of tables from PostgreSQL DB as source, and a set of tables from SQL Server as destination, while Apache Kafka will be used as the “replication mechanism”. For connecting to Postgres, and detecting its data changes (CDC) a connector plugin is also required. Therefore, we will use Debezium as it is also an open-source and built from the ground up to go along with Kafka as easily as possible.

Remember for achieving the required goal (replicating data from Postgres to SQL Server using Kafka) we can use a Kafka-as-a-service product in any of the available cloud providers. But, to fully understand that how the architecture works, and also, simply as a showcase, we have made our mind not to use any SaaS related product, nor Docker. Now, we’re ready to proceed.

Get to the task:

Let’s start by installing our first VM. We are using a Windows PC to do all the testing, so we are creating the VM in Hyper-V. You can use whichever hypervisor you want. We have used Red Hat Developer edition 8.5 as OS. For that we need to subscribe to the Red Hat Portal.

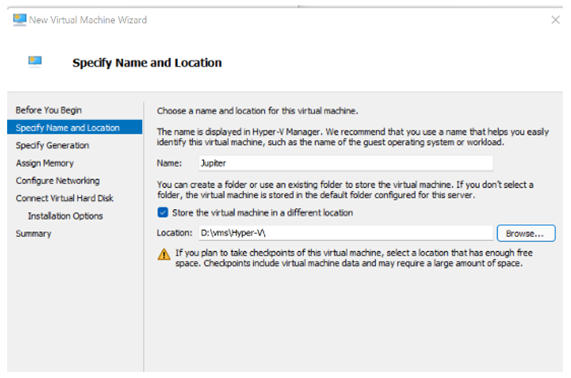

1. We will create a VM called “Jupiter” which will act as our Postgres and Kafka server.

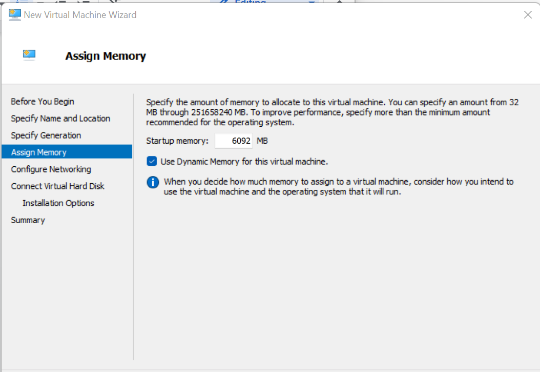

2. As far as Hyper-V is concerned, it will be Gen 2. Ensure that you allocate enough memory so that you can do proper testing. In our case, we are setting it to 6 GB – dynamic, which means Hyper-V won’t dedicate that memory entirely to the VM.

3. For the sake of networking, we are adding a virtual ethernet adapter which is attached to the physical nic of the host, which means your wi-fi router will assign an IP address directly to the VM.

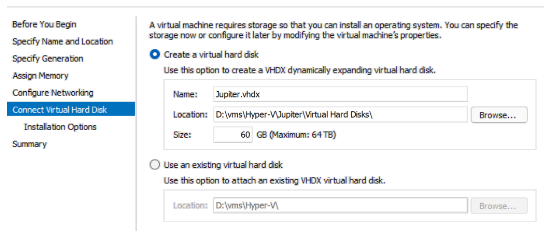

4. As we have a small data sample, the space of 60 GB is quite enough.

5. Ensure to attach the Red Hat iso in this way you can install the OS shortly.

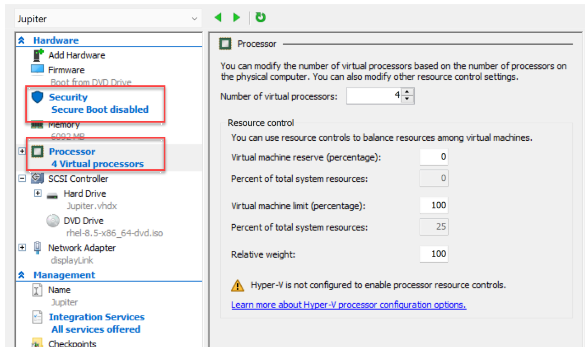

6. Also ensure that you set a reasonable amount of virtual cpus, and disable Secure Boot for the time being.

7. Enable all guest services.

8. Now proceed to installing the OS, and Starting the VM. Select its console window and hit any key to start the install process.

9. Choose the preferred installation language.

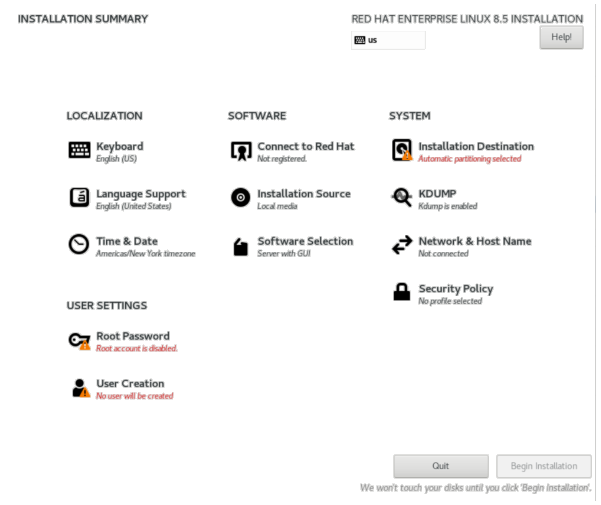

10. In this screen, we need to adjust the following: Partitioning is set to Auto, Kdump is disabled, enable Network, Root Password is set, create a user. After doing all the desired settings, we will click ‘Begin Installation’.

11. It will take some time to complete the OS installation. Then, we will reboot.

12. During the configuration phase, you will have to accept the License terms.

13. After that, click “Finish Configuration”.

14. You need to get your new VM registered with your Red Hat account, otherwise, you can’t download the necessary packages for the environment to work properly. Click ‘Register’, and provide your username (NOT EMAIL) and password for your Red Hat account.

15. At this stage, we can afford to ignore the next screens, and we don’t need to sign on to any other online account.

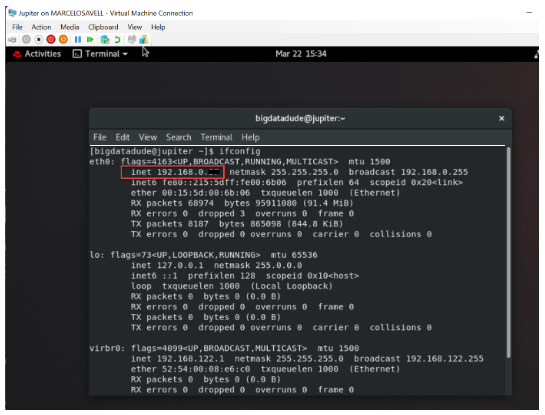

16. Now the GUI doesn’t need to be used anymore. We can use any Terminal-like app in Windows to connect with our VM. Now get the IP address for the VM you just installed. It can be done by opening a terminal window and typing: “ifconfig”.

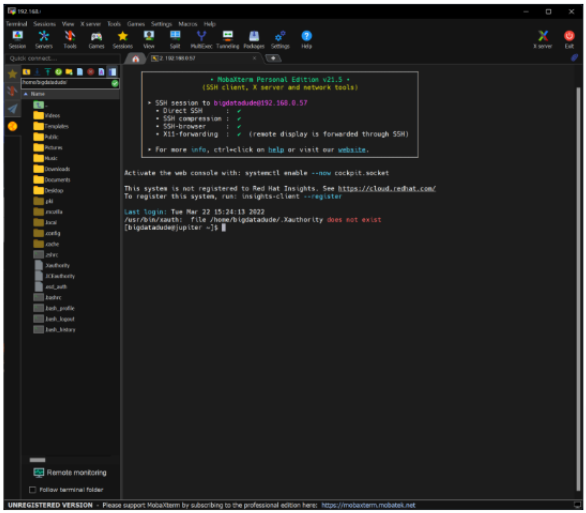

17. MobaXTerm is quite useful and has a very intuitive UI. After connecting, you’ll see a Windows Explorer-like side window, and the main bash terminal where you can input the required commands.

We have the most hands-on experience in the Kafka technology.

Talk to our Kafka experts & experience the benefit of our methodological approach. Schedule a free assessment today.