HashiCorp and Microsoft have partnered to develop Terraform modules aligned with Microsoft’s Azure Well-Architected Framework and industry best practices. In previous discussions, we explored how to build robust, secure Azure reference architectures with HashiCorp Terraform and Vault, and how to manage post-deployment operations. This piece covers the building blocks HashiCorp and Microsoft provide for accelerating AI adoption on Azure securely, repeatably, and cost-effectively with Terraform. Our focus is on using Terraform to provision Azure OpenAI services.

Navigating the AI Landscape

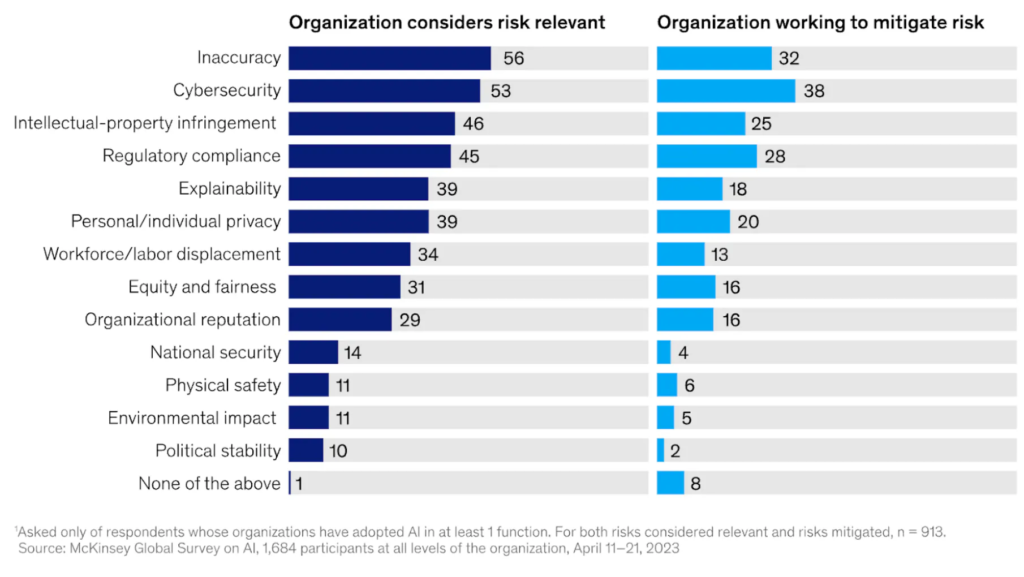

McKinsey’s 2023 report highlights the growing prominence of AI, which has shifted from a niche concern among tech specialists to a focus for C-suite executives. Notably, nearly a quarter of surveyed C-suite executives personally use generative AI tools for their work, which emphasizes the strategic significance of AI initiatives. The report also points to the associated risks, including cybersecurity vulnerabilities, compliance issues, the need for re-skilling, and the potential inaccuracies inherent in AI systems.

In this exploration, we use Terraform to address some of these challenges, particularly those related to security and compliance. We also look at key AI use cases and how they are deployed on the Azure platform.

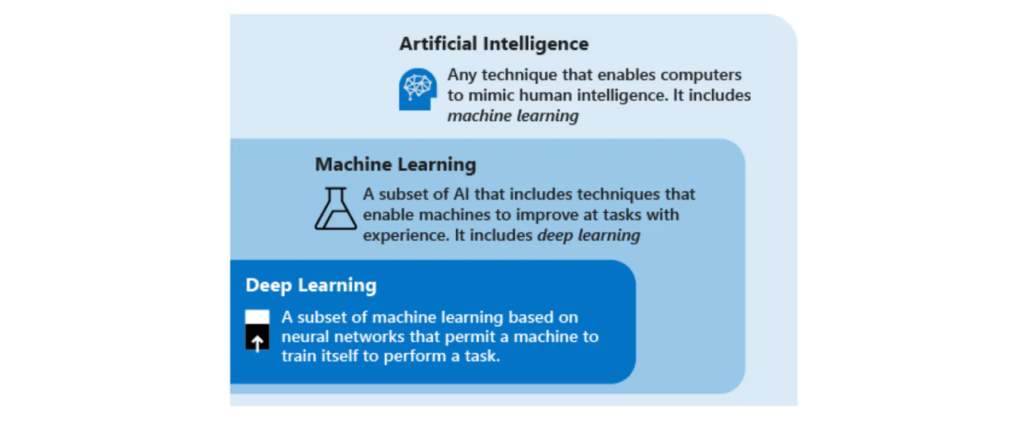

Understanding AI Fundamentals

Before delving into AI adoption, it helps to understand how its foundational components relate: machine learning (ML) is a subset of AI, and deep learning (DL) is a subset of ML. Generative AI, propelled into the spotlight by OpenAI’s ChatGPT, sits within deep learning. It covers emulating human-like behavior and creating novel content, and marks a significant advance in AI capabilities.

Azure OpenAI and AI Services

Microsoft’s Azure AI services are a suite of off-the-shelf and customizable AI tools that fall under machine learning. These services span natural language processing, computer vision, and speech recognition, and help organizations derive actionable insights from data, automate processes, enhance customer experiences, and make informed decisions.

Azure OpenAI Service, the focus of our discussion, applies large language models (LLMs) and generative AI to diverse use cases. It provides REST API access to OpenAI’s language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series.
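As a minimal sketch of what provisioning this service with Terraform can look like, the configuration below creates an Azure OpenAI account and one model deployment using the `azurerm` provider. The resource names, the region, the chosen model, and the provider version range are all assumptions made for illustration; model availability varies by region and over time, so check it for your subscription before applying.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
      # Assumption: a 3.x provider release, whose deployment schema uses the
      # `scale` block shown below (later major versions changed this block).
      version = ">= 3.80, < 4.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical resource group name and region, used only for this example.
resource "azurerm_resource_group" "openai" {
  name     = "rg-openai-example"
  location = "eastus"
}

# The Azure OpenAI account is a Cognitive Services account of kind "OpenAI".
resource "azurerm_cognitive_account" "openai" {
  name                  = "oai-example-account" # hypothetical name
  location              = azurerm_resource_group.openai.location
  resource_group_name   = azurerm_resource_group.openai.name
  kind                  = "OpenAI"
  sku_name              = "S0"
  custom_subdomain_name = "oai-example-account" # must be globally unique
}

# One model deployment inside the account. The model version is omitted so
# that the service default is used; pin it explicitly for reproducible builds.
resource "azurerm_cognitive_deployment" "chat" {
  name                 = "chat"
  cognitive_account_id = azurerm_cognitive_account.openai.id

  model {
    format = "OpenAI"
    name   = "gpt-35-turbo" # assumption: available in the chosen region
  }

  scale {
    type = "Standard"
  }
}

# The REST endpoint that client applications call.
output "openai_endpoint" {
  value = azurerm_cognitive_account.openai.endpoint
}
```

After `terraform apply`, the `openai_endpoint` output gives the base URL for REST calls against the deployed model.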

The Terraform configuration below provisions an Azure Machine Learning workspace together with its dependent resources. It references a resource group, random name helpers, input variables, and a client configuration data source that are assumed to be declared elsewhere in the same configuration.

    # Dependent resources for Azure Machine Learning
    resource "azurerm_application_insights" "default" {
      name                = "${random_pet.prefix.id}-appi"
      location            = azurerm_resource_group.default.location
      resource_group_name = azurerm_resource_group.default.name
      application_type    = "web"
    }

    resource "azurerm_key_vault" "default" {
      name                     = "${var.prefix}${var.environment}${random_integer.suffix.result}kv"
      location                 = azurerm_resource_group.default.location
      resource_group_name      = azurerm_resource_group.default.name
      tenant_id                = data.azurerm_client_config.current.tenant_id
      sku_name                 = "premium"
      purge_protection_enabled = false
    }

    resource "azurerm_storage_account" "default" {
      name                            = "${var.prefix}${var.environment}${random_integer.suffix.result}st"
      location                        = azurerm_resource_group.default.location
      resource_group_name             = azurerm_resource_group.default.name
      account_tier                    = "Standard"
      account_replication_type        = "GRS"
      allow_nested_items_to_be_public = false
    }

    resource "azurerm_container_registry" "default" {
      name                = "${var.prefix}${var.environment}${random_integer.suffix.result}cr"
      location            = azurerm_resource_group.default.location
      resource_group_name = azurerm_resource_group.default.name
      sku                 = "Premium"
      admin_enabled       = true
    }

    # Machine Learning workspace
    resource "azurerm_machine_learning_workspace" "default" {
      name                          = "${random_pet.prefix.id}-mlw"
      location                      = azurerm_resource_group.default.location
      resource_group_name           = azurerm_resource_group.default.name
      application_insights_id       = azurerm_application_insights.default.id
      key_vault_id                  = azurerm_key_vault.default.id
      storage_account_id            = azurerm_storage_account.default.id
      container_registry_id         = azurerm_container_registry.default.id
      public_network_access_enabled = true

      # A system-assigned managed identity for the workspace
      identity {
        type = "SystemAssigned"
      }
    }

Expedited Deployment through Terraform Modules

A Terraform module is a collection of configuration files, kept together in a directory, that encapsulates a set of resources for a specific task. Modules streamline the development lifecycle by reducing redundant code and speeding up the deployment of AI services on Azure. The community-built Terraform module for deploying Azure OpenAI Service is one example, offering quick provisioning within the Terraform ecosystem. Many other modules for Microsoft Azure are available in the Terraform Registry.

Commencing Your AI Journey

For those eager to start with OpenAI, a pre-built module such as the Terraform module for Azure OpenAI is a convenient starting point. As you become familiar with the module and your own requirements take shape, you can customize it or build bespoke modules tailored to your organization’s needs. With such a module, a short configuration is enough to deploy an environment that uses GPT or DALL-E models, subject to the requisite approvals in your Azure subscription.
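To illustrate the module-based approach, here is a hedged sketch of a module call. It assumes the community module is the one published in the Terraform Registry as `Azure/openai/azurerm`, and the input names (`resource_group_name`, `location`, `deployment` and its fields) follow that module's published examples as we understand them. Treat them as assumptions and verify them against the module documentation for the version you pin. The resource group name, region, and deployment key are hypothetical.

```hcl
provider "azurerm" {
  features {}
  # Newer provider releases may also need a subscription ID, for example
  # through the ARM_SUBSCRIPTION_ID environment variable.
}

# Supplied by the caller because available model versions change over time.
variable "model_version" {
  type        = string
  description = "Version of the gpt-35-turbo model to deploy in your region."
}

# Hypothetical resource group for the example.
resource "azurerm_resource_group" "openai" {
  name     = "rg-openai-module-example"
  location = "eastus"
}

module "openai" {
  # Assumed registry address of the community module; add a `version`
  # argument to pin a release you have reviewed.
  source = "Azure/openai/azurerm"

  resource_group_name = azurerm_resource_group.openai.name
  location            = azurerm_resource_group.openai.location

  # Assumed input shape: a map of deployments keyed by a label of your choice.
  deployment = {
    "chat_model" = {
      name          = "gpt-35-turbo"
      model_format  = "OpenAI"
      model_name    = "gpt-35-turbo"
      model_version = var.model_version
      scale_type    = "Standard"
    }
  }
}
```

Compared with declaring each resource by hand, the module call keeps the caller's configuration to a few inputs, at the cost of depending on the module's interface staying stable between versions.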

Azure AI Services: Empowering Organizational AI Scalability

As organizations work to scale AI within their operations, Azure AI services play a central role. At the core is the Azure Machine Learning workspace, the top-level resource that provides a centralized place to manage all artifacts related to Azure Machine Learning.

The Azure Machine Learning workspace stores the history of jobs, including logs, metrics, outputs, and script snapshots. It also holds references to resources such as datastores and compute, and to assets such as models, environments, and components. This centralized approach makes it easier for team members to collaborate on machine learning artifacts and to group related work.

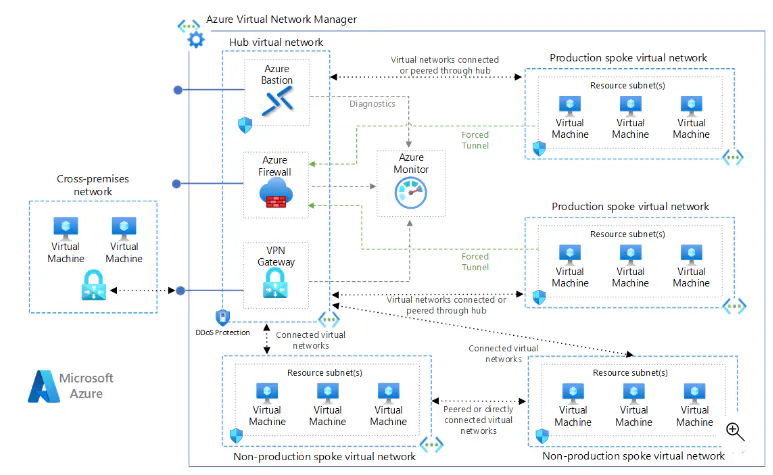

Facilitating AI Infrastructure Deployment with Hub-Spoke Network Topology

In Azure network architecture, hub-and-spoke is a widely used reference topology. A central hub virtual network acts as the point of connectivity for multiple spoke virtual networks. The spoke networks connect to the hub and can be used to isolate workloads, while the hub enables cross-premises scenarios, including connections to on-premises networks.

Organizations can use the hub-and-spoke topology as a foundation for deploying Azure AI services. The architecture supports an “AI hub,” in which services are shared across multiple virtual networks for collaboration, application development, and experimentation. Sharing resources this way shortens the path from experiment to new business solutions.
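The hub-and-spoke layout described above can be sketched in Terraform as one hub virtual network, a map of spoke networks, and a pair of peerings per spoke. This is a minimal illustration, not a reference implementation: the names, region, address ranges, and the two example spokes are assumptions.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 3.80, < 4.0" # assumption: a 3.x provider release
    }
  }
}

provider "azurerm" {
  features {}
}

locals {
  # Hypothetical spokes: name => address space. Ranges must not overlap.
  spokes = {
    ml   = "10.1.0.0/16"
    apps = "10.2.0.0/16"
  }
}

resource "azurerm_resource_group" "network" {
  name     = "rg-network-example"
  location = "eastus"
}

# Central hub network that every spoke connects to.
resource "azurerm_virtual_network" "hub" {
  name                = "vnet-hub"
  location            = azurerm_resource_group.network.location
  resource_group_name = azurerm_resource_group.network.name
  address_space       = ["10.0.0.0/16"]
}

# One virtual network per spoke, isolating each workload.
resource "azurerm_virtual_network" "spoke" {
  for_each            = local.spokes
  name                = "vnet-spoke-${each.key}"
  location            = azurerm_resource_group.network.location
  resource_group_name = azurerm_resource_group.network.name
  address_space       = [each.value]
}

# Peering must be declared in both directions to carry traffic.
resource "azurerm_virtual_network_peering" "hub_to_spoke" {
  for_each                  = azurerm_virtual_network.spoke
  name                      = "hub-to-${each.key}"
  resource_group_name       = azurerm_resource_group.network.name
  virtual_network_name      = azurerm_virtual_network.hub.name
  remote_virtual_network_id = each.value.id
  allow_forwarded_traffic   = true
}

resource "azurerm_virtual_network_peering" "spoke_to_hub" {
  for_each                  = azurerm_virtual_network.spoke
  name                      = "${each.key}-to-hub"
  resource_group_name       = azurerm_resource_group.network.name
  virtual_network_name      = each.value.name
  remote_virtual_network_id = azurerm_virtual_network.hub.id
  allow_forwarded_traffic   = true
}
```

Adding a spoke is then a one-line change to `local.spokes`. Spokes are not peered with each other, so traffic between them has to route through the hub.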

Securing Azure Machine Learning Workspaces

For a secure Azure Machine Learning workspace deployment, organizations can start from a comprehensive example provided by iSmile Technologies. The approach is structured: clone the repository, initialize Terraform in the designated directory, and run a build to create a secured hub-and-spoke Azure Machine Learning workspace.
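One common way to limit a workspace's network exposure is to reach it through a private endpoint in a spoke subnet. The sketch below shows that single piece. It is an assumption about what a secured deployment can include, not a description of the referenced example, and the variable names and resource names are hypothetical.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 3.80, < 4.0" # assumption: a 3.x provider release
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical inputs describing resources that already exist.
variable "resource_group_name" {
  type = string
}

variable "location" {
  type = string
}

variable "subnet_id" {
  type        = string
  description = "ID of the spoke subnet that hosts the private endpoint."
}

variable "workspace_id" {
  type        = string
  description = "Resource ID of the Azure Machine Learning workspace."
}

# Private endpoint that exposes the workspace on a private IP in the subnet.
resource "azurerm_private_endpoint" "mlw" {
  name                = "pe-mlw-example"
  location            = var.location
  resource_group_name = var.resource_group_name
  subnet_id           = var.subnet_id

  private_service_connection {
    name                           = "psc-mlw-example"
    private_connection_resource_id = var.workspace_id
    subresource_names              = ["amlworkspace"]
    is_manual_connection           = false
  }
}
```

A complete setup would also need private DNS resolution for the workspace endpoints and `public_network_access_enabled = false` on the workspace itself; both are left out here to keep the sketch short.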

Once configured, the Azure Machine Learning workspace provides a secure environment for data science endeavors. Organizations can securely connect to the Windows Data Science Virtual Machine (DSVM) by leveraging Azure Bastion. Equipped with pre-installed data science tools and configurations, the DSVM empowers data scientists to embark on their machine learning journey confidently and efficiently.
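For the Bastion connection mentioned above, a minimal sketch looks like the following. Azure requires the Bastion subnet to be named `AzureBastionSubnet`; everything else here (variable names, resource names, and the default address prefix, which assumes a hub network of `10.0.0.0/16`) is hypothetical and should be adapted to your network.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 3.80, < 4.0" # assumption: a 3.x provider release
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical inputs describing the existing hub network.
variable "resource_group_name" {
  type = string
}

variable "location" {
  type = string
}

variable "hub_vnet_name" {
  type = string
}

variable "bastion_subnet_prefix" {
  type        = string
  default     = "10.0.255.0/26" # assumption: free range inside the hub network
  description = "Address prefix for the Bastion subnet (/26 or larger)."
}

# Dedicated subnet; the name is fixed by the Azure Bastion service.
resource "azurerm_subnet" "bastion" {
  name                 = "AzureBastionSubnet"
  resource_group_name  = var.resource_group_name
  virtual_network_name = var.hub_vnet_name
  address_prefixes     = [var.bastion_subnet_prefix]
}

# Bastion needs a static Standard public IP of its own.
resource "azurerm_public_ip" "bastion" {
  name                = "pip-bastion-example"
  location            = var.location
  resource_group_name = var.resource_group_name
  allocation_method   = "Static"
  sku                 = "Standard"
}

resource "azurerm_bastion_host" "hub" {
  name                = "bas-hub-example"
  location            = var.location
  resource_group_name = var.resource_group_name

  ip_configuration {
    name                 = "bastion-ipconfig"
    subnet_id            = azurerm_subnet.bastion.id
    public_ip_address_id = azurerm_public_ip.bastion.id
  }
}
```

With Bastion in the hub, the DSVM itself needs no public IP address: data scientists open the session through the Azure portal, and the connection reaches the VM over the private network.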

How ISmile Technologies Helps

ISmile Technologies helps organizations implement AI solutions on platforms like Microsoft Azure. By combining expertise in AI technologies with robust implementation strategies, adherence to best practices, and a collaborative approach, iSmile Technologies enables clients to realize AI’s potential for innovation, efficiency, and competitive advantage.

Conclusion

In collaboration with HashiCorp, Microsoft helps organizations approach AI adoption on Azure with confidence and efficiency. By using Terraform with Azure AI services, businesses can address the challenges of AI deployment while strengthening security and supporting regulatory compliance. As AI spreads across industries, the partnership between HashiCorp and Microsoft gives organizations a practical path to applying AI within the Azure ecosystem.